Who does the public hold accountable in the age of mass misinformation? What is being done now to deal with the problem? How can it be done better?

Fake news is a hot topic. Big names and big businesses have been implicated, and topics of blame and responsibility have risen to the forefront. While some remark that fake news is nothing new, fake news in the modern socio-political moment, accompanied and strengthened by the rapidly globalizing and technologizing landscapes, is indeed something new.

In the summer of 2017, I had the incredible and challenging opportunity of conducting my first ever research project, focused on pressing, fake news-related questions. With funding from the inaugural Andrew Kohut fellowship and with the patient and helpful guidance of Professor Peter Enns, I used the Roper Center’s iPoll database to explore my ideas. What these surveys hinted at was alarming, and it became clear how crucial it was to explore the topic at a deeper level and to brainstorm ways this research could materialize into a productive contribution. Using data sourced from Roper, this project focuses on accountability, user confidence, and how public opinion polling can drive more effective fake news intervention efforts.

Ultimately, the research I conducted this summer leads me to suggest that:

- There is evidence that American people do want media and technology corporations held accountable when they facilitate the spread of deceptive news—but these opinions are somewhat malleable depending on how poll questions are framed for respondents.

- Overconfidence effects are at play. Americans, on the individual level, do not believe that they themselves are especially vulnerable to fake news. These poll results must be taken very seriously by any initiatives devoted to combating fake news and bolstering media literacy.

What is fake news?

Defining fake news is more challenging than it may seem at the outset, perhaps due to how frequently the term is thrown around and the range of different meanings it holds for different subsets of the population. I limited the term to mean factually inaccurate news articles, spread online through mass media and social networks with the assistance of advertisement generators and unknowing (or perhaps knowing) netizens. The role of ad generators is quite significant. It is important to keep in mind that there is a large financial incentive component (in addition to and aside from socio-political incentives) driving the curation of fake news. References to fake news in this writing focus primarily on the type of articles that are not based in fact and are spread by disreputable sources. Well-known examples of what I typify as fake news include the article that asserted the Pope endorsed Donald Trump, or pieces related to the Pizzagate scandal, which, using fake news websites, among other platforms, gained immense traction in spreading misinformation. When considering respondents and the choices they select when answering a public opinion poll, it is crucial to keep in mind that determining a definition for fake news is a complicated process, and members of the general public answering these questions may have different interpretations.

How do Americans really feel about fake news?

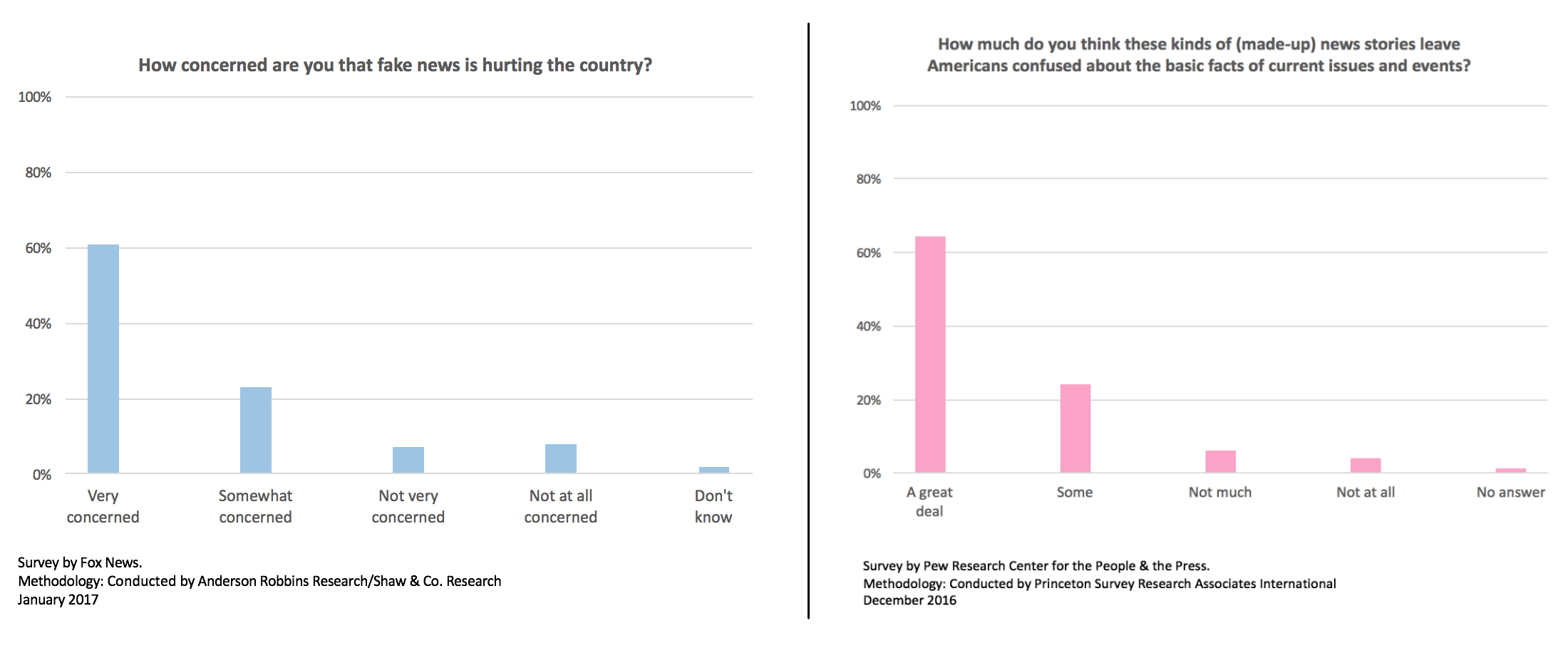

Large numbers of Americans are perturbed by the traction that mass misinformation has garnered. The majority of those surveyed believe that factually inaccurate reporting is hurting the country and leaving Americans confused.

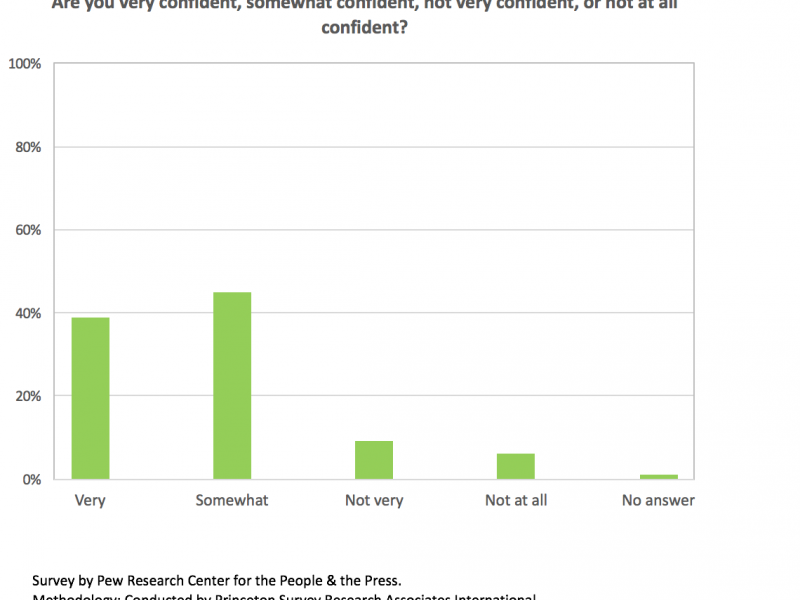

While these may seem like obvious assertions, it is eye-opening to access the numbers behind these statements. Some of the first questions this project explored were: What do Americans really think of fake news? And exactly how many of them think what? Working with actual numbers in Roper’s iPoll helped to clarify that a substantial majority of Americans (84%) are anywhere from “somewhat” to “very” concerned that “fake news is hurting the country,” and a similar majority (88%) think that “made-up news stories leave Americans confused about the basic facts of current issues and events.” Those with the strongest beliefs, who are “a great deal” or “very” concerned, made up over 60% of the results in each poll.

Let’s take a look:

How does the American public want fake news to be dealt with? Are social media and technology corporations to blame?

After establishing that fake news was a problem that a majority of Americans were concerned about, I set out to explore the topic of accountability. Specifically, this project focused on accusations against large technology and social network corporations for facilitating the spread of mass misinformation.

Sixty percent of respondents in a February 2017 poll conducted by the Associated Press marked “yes” when asked if they ever got their news from Facebook. Given that a sizable chunk of the American population does get at least some of its news through online media and technology websites, the conversation surrounding the responsibilities of these platforms has become increasingly salient in the wake of election-related mass misinformation. Twitter, Facebook, and Google have been brought to the forefront, and their leaders have been called on to speak out.

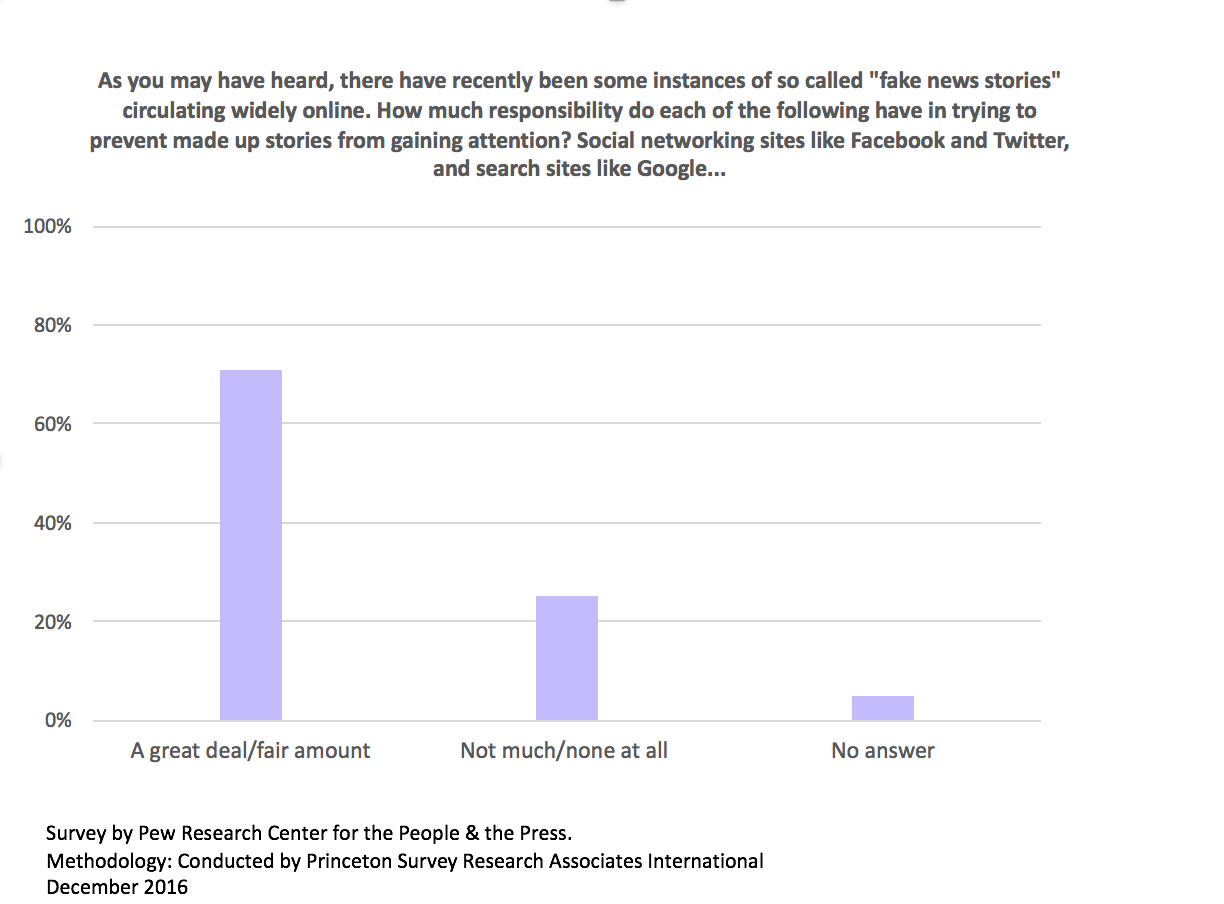

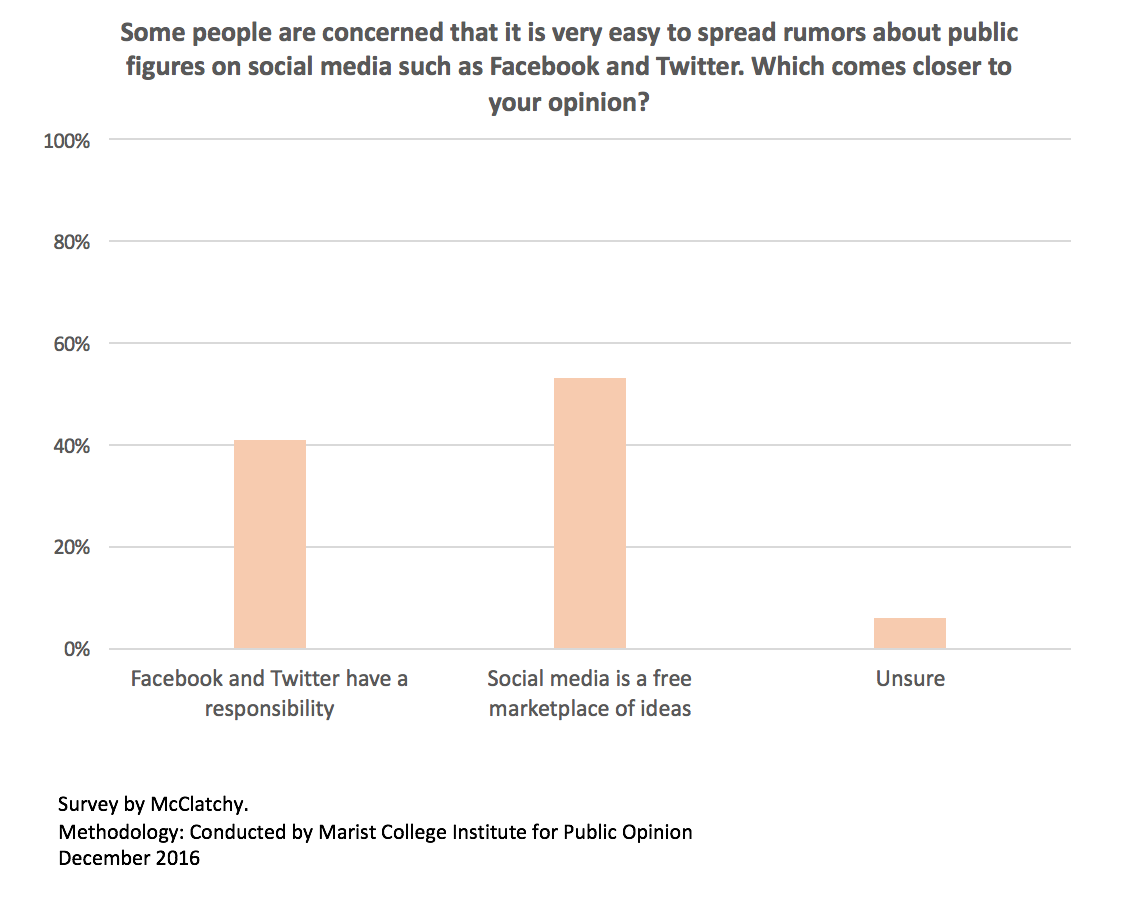

Through public opinion data, I hoped to better understand how the American people at large (rather than just academics, journalists, activists, etc.) felt about holding these corporations accountable. Polls suggest that people do indeed want media and tech businesses to take responsibility and help prevent the spread of misinformation. This demand for responsibility, however, weakens when polling prompts include language in support of free-market ideals.

When wording is altered to prime free market ideals, respondents choose a little differently. A McClatchy/Marist poll presents this phenomenon with the following question:

These polls showcase some interesting findings. First off, they show that the opinion of a notable portion of the public sways to the side of holding big media and tech businesses accountable. However, in the second poll, the percentage of respondents who want sites like Facebook and Twitter to take responsibility drops to around 40%. This disparity can be explained in several ways.

One conclusion is that Americans do not have fully formed perspectives on corporate social responsibility in the context of dealing with misinformation. This would explain why, in one public opinion poll, over 70% of Americans believed that networking and search sites hold a “fair amount” or a “great deal” of responsibility, while in another poll only around 40%, less than a majority, held the opinion that “Facebook and Twitter have a responsibility to stop the sharing of information that is identified as false.”

However, a reader could also interpret these results differently. Even when McClatchy/Marist positions the option to hold these businesses accountable as counter to “free market” thinking, a sizable portion of respondents, slightly over 40%, still believes that these networking platforms have a responsibility. A question phrased to frame the misinformation topic in terms of the country’s economic system of choice still sparks substantially divided perspectives on the fake news dilemma.

Some public-opinion based insights for fake news-combating initiatives

One especially interesting finding in iPoll showed that despite all of the attention generated on the topic of fake news and its negative impacts, people are quite confident in their own abilities to detect fake news. This confidence is not justified by competence—a significant finding for any initiatives geared toward improving media literacy and combating misinformation.

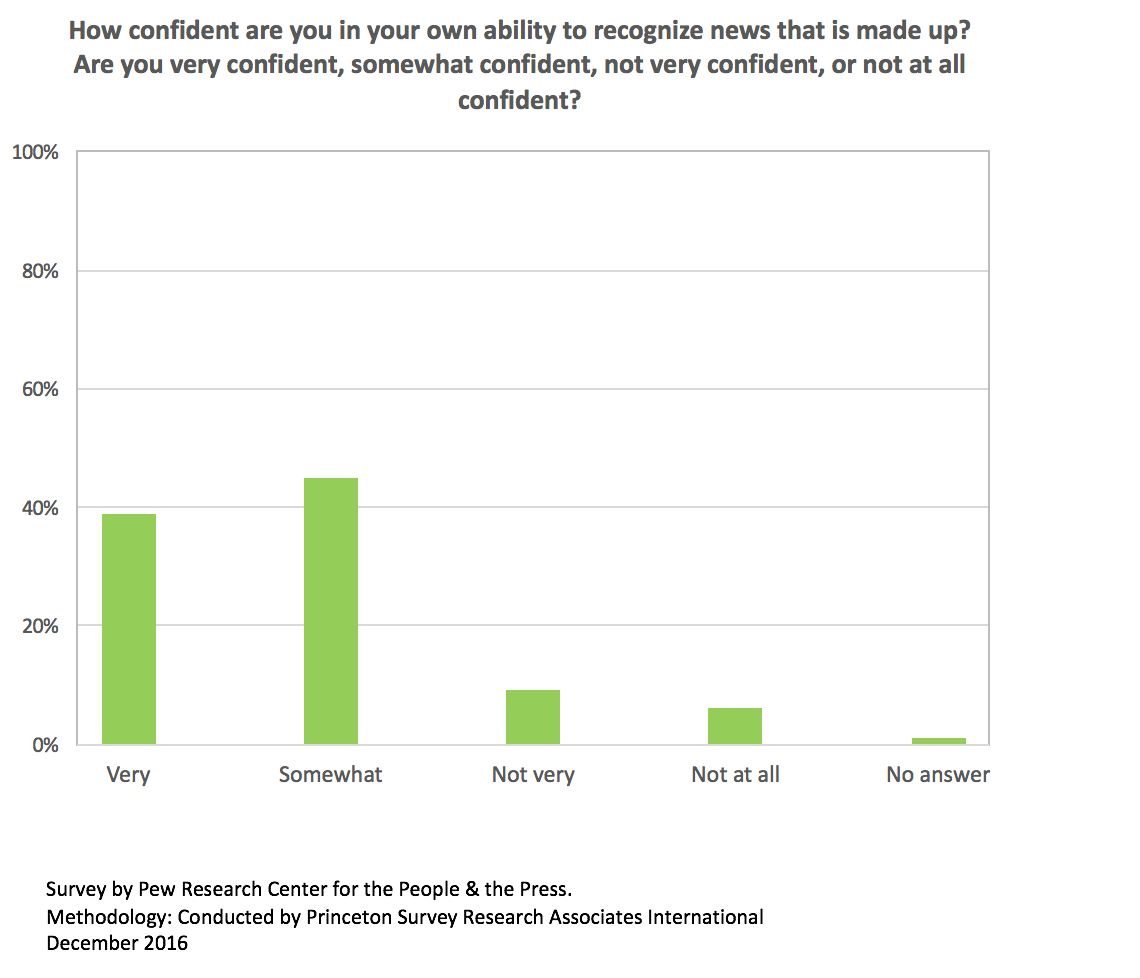

The fake news problem implicates many actors and conceptions of responsibility. Public opinion data establishes that a majority of Americans are worried about fake news and assign to online networking sites and search engines the duty of taking preventative action. It also reveals a somewhat frightening, somewhat amusing belief held by a majority of respondents: confidence in one’s own ability to tell fake news from authentic news. A glance at this Pew 2016 poll shows us the numbers:

Here, an overwhelming majority of 84% of survey respondents have selected either “very” or “somewhat” confident when characterizing their own ability to tell real news from fake news, and around 15% indicated that they are “not very” or “not at all” confident.

This self-perceived competence is noteworthy because studies have demonstrated that despite the confidence many hold in their ability to discern between real and fake news, Americans are quite incapable of thoroughly detecting false information and untrustworthy news sources. For example, Clay Calvert contributed an article to the Huffington Post, aptly titled “Fake News, Censorship & the Third-Person Effect: You Can’t Fool Me, Only Others!” and Brett Edkins wrote a Forbes piece with the headline “Americans Believe They Can Detect Fake News. Studies Show They Can’t.” Both authors conclude with or hint at solutions to the problem, emphasizing the importance of educating the public on quality news consumption and/or holding social media accountable.

I suggest that this pairing of incompetence and overconfidence may dilute the effectiveness of solutions to fake news—especially when a vast number of initiatives designed to combat the fake news problem depend on overconfident users’ participation. Programs need to specifically address overconfidence effects in order to be productive on a large scale. When people are relatively content with their ability to do something, they typically find themselves less motivated to spend time, energy, and money improving, especially if they consider themselves busy beings preoccupied with improving capabilities in domains they are less skilled in.

User overconfidence becomes a serious problem when considering the workshops, applications, software, and media literacy curricula dedicated to fighting the mass misinformation problem. Many programs rely on the potentially flawed notion that there exists an eager audience awaiting their new service or product. This is a crucial finding that must be acknowledged by Facebook, Twitter, Google, the 20 recipients of the Knight Foundation’s recent Prototype Fund focused on addressing the “spread of misinformation,” community and academic workshop curators, and everyone else devoted in some capacity to addressing fake news. If the success of an initiative depends on mass participation, for example on users downloading additional software on their phones and computers, setting aside time in their schedules to learn a lesson, or reporting questionable articles, then the creators of said initiatives must confront the possibility that their hard work may very well fall on deaf ears. Americans are worried about fake news, but that does not mean they will make an effort to take advantage of helpful resources in their own lives to assist with solving this problem, especially if they think they can manage competently with the status quo.

The questions we, as tech/education/media designers, responsible business people, and engaged citizens need to start asking are:

- How do we bolster the idea that fake news is not only threatening on the societal level, but on the individual level as well? In essence, how do we overcome overconfidence effects in the context of mass misinformation?

- How do we ensure that solutions are not only generated, but marketed compellingly?

Until we begin to thoroughly address these obstacles, well-intentioned and carefully thought-out efforts to combat fake news may falter. Public opinion shows us that we must motivate a substantial number of citizens to become dedicated to improving their own media literacy in measurable, tangible ways.

Claire Liu is a junior pursuing a double major in Government and independent study, focused on persuasion and propaganda through the Arts and Sciences College Scholar program, and a minor in French. She was a Roper Center Kohut Fellow in the summer of 2017. To learn more about the Kohut Fellowship, click here.